On a client project, we wanted to keep search engines from indexing pages on the site. The pages needed to be public and directly linked from various sources, but not findable through search. So we did the reasonable thing: we modified our robots.txt and added our meta tags. Unfortunately, this didn't work as we expected, and pages still showed up in Google searches.

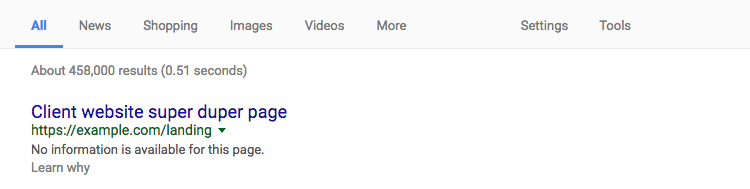

We received reports that links were showing up in Google search results. And they were, with the page title and the description "No information is available for this page."

But... why?

After digging through Google's support documentation, I came across the topic "Block search indexing with 'noindex'" at https://support.google.com/webmasters/answer/93710?hl=en

> Important! For the noindex meta tag to be effective, the page must not be blocked by a robots.txt file. If the page is blocked by a robots.txt file, the crawler will never see the noindex tag, and the page can still appear in search results, for example if other pages link to it.

Well, that is conflicting. If your robots.txt blocks a page, the bot does not crawl it and so never reads its meta tags. That makes sense. However, if someone links to your page without a nofollow on their anchor tag, the URL can still get indexed. So the meta tags go unseen whenever the page is blocked but directly linked. That's cool.
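To make that interaction concrete, here is a deliberately simplified model of the behavior the Google article describes. The function name and its three flags are hypothetical, made up for illustration. It is a sketch of the rule as quoted above, not of how Google's indexer actually works.

```php
<?php

/**
 * Simplified model: can a URL still show up in search results?
 *
 * Hypothetical helper for illustration only; the flags are assumptions.
 */
function canAppearInResults(bool $blockedByRobotsTxt, bool $hasNoindex, bool $linkedFromElsewhere): bool {
  if ($blockedByRobotsTxt) {
    // The crawler never fetches the page, so it never sees noindex.
    // The bare URL can still be listed if other pages link to it.
    return $linkedFromElsewhere;
  }
  // The page is crawlable, so a noindex directive is read and honored.
  return !$hasNoindex;
}

// Blocked by robots.txt, has noindex, linked from elsewhere: our situation.
var_dump(canAppearInResults(true, true, true));
// Same page, but crawlable, so the noindex tag can be read.
var_dump(canAppearInResults(false, true, true));
```

The first call returns true and the second returns false, which matches what we saw: blocking the crawler is exactly what kept our noindex tag from being read.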

X-Robots-Tag to the rescue?

Then, at the bottom of the article, it states that Google supports an X-Robots-Tag HTTP header. There is no disclaimer here about meta information, so I am assuming this might be our fix-all. To add the header, I created a response event subscriber. The event subscriber adds the header to the response if it is an HtmlResponse.

Services file

    services:
      mymodule.response_event_subscriber:
        class: \Drupal\mymodule\EventSubscriber\ResponseEventSubscriber
        tags:
          - { name: 'event_subscriber' }

EventSubscriber class

    <?php

    namespace Drupal\mymodule\EventSubscriber;

    use Drupal\Core\Render\HtmlResponse;
    use Symfony\Component\EventDispatcher\EventSubscriberInterface;
    // FilterResponseEvent was deprecated in Symfony 4.3 and removed in 5.0;
    // newer Drupal releases use ResponseEvent instead.
    use Symfony\Component\HttpKernel\Event\FilterResponseEvent;
    use Symfony\Component\HttpKernel\KernelEvents;

    /**
     * Class ResponseEventSubscriber.
     */
    class ResponseEventSubscriber implements EventSubscriberInterface {

      /**
       * Explicitly tell Google to bugger off.
       *
       * @param \Symfony\Component\HttpKernel\Event\FilterResponseEvent $event
       *   The response event.
       */
      public function xRobotsTag(FilterResponseEvent $event) {
        $response = $event->getResponse();
        // Only tag full HTML pages, not JSON, files, or redirects.
        if ($response instanceof HtmlResponse) {
          $response->headers->set('X-Robots-Tag', 'noindex');
        }
      }

      /**
       * {@inheritdoc}
       */
      public static function getSubscribedEvents() {
        $events[KernelEvents::RESPONSE][] = ['xRobotsTag', 0];
        return $events;
      }

    }
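One way to confirm the subscriber is doing its job is to look at the response headers of a page. The helper below is a rough sketch and not part of the module: `hasNoindexHeader()` is a hypothetical name, the sample headers are made up, and it assumes raw header lines in the shape PHP's `get_headers()` returns. It also ignores the user-agent-prefixed form of the header (for example `googlebot: noindex`).

```php
<?php

/**
 * Checks raw HTTP header lines for an X-Robots-Tag containing noindex.
 *
 * Hypothetical helper; expects lines such as "X-Robots-Tag: noindex".
 */
function hasNoindexHeader(array $headerLines): bool {
  foreach ($headerLines as $line) {
    // Split "Name: value" into two parts; the status line has no colon.
    $parts = explode(':', $line, 2);
    if (count($parts) !== 2) {
      continue;
    }
    [$name, $value] = $parts;
    // Header names are case-insensitive.
    if (strcasecmp(trim($name), 'X-Robots-Tag') !== 0) {
      continue;
    }
    // The value may hold several comma-separated directives.
    $directives = array_map('trim', explode(',', strtolower($value)));
    if (in_array('noindex', $directives, true)) {
      return true;
    }
  }
  return FALSE;
}

// Sample header lines, made up for illustration.
$sample = [
  'HTTP/1.1 200 OK',
  'Content-Type: text/html; charset=UTF-8',
  'X-Robots-Tag: noindex',
];
var_dump(hasNoindexHeader($sample));
```

Against a live site, this could be called as `hasNoindexHeader(get_headers($url) ?: [])`, where `$url` is a page the module serves.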

Results?

It has only been active for a few days, so I do not know the exact results. I am guessing the client will need to perform specific actions within Google and Bing webmaster consoles.
